Quick update on the Text Arena leaderboard! We’ve just refreshed our standings with the latest ERNIE-5.0-Preview-1103 on LMArena. 🚀 ERNIE-5.0-Preview-1103 holds a top-20 spot in the most competitive Arena.

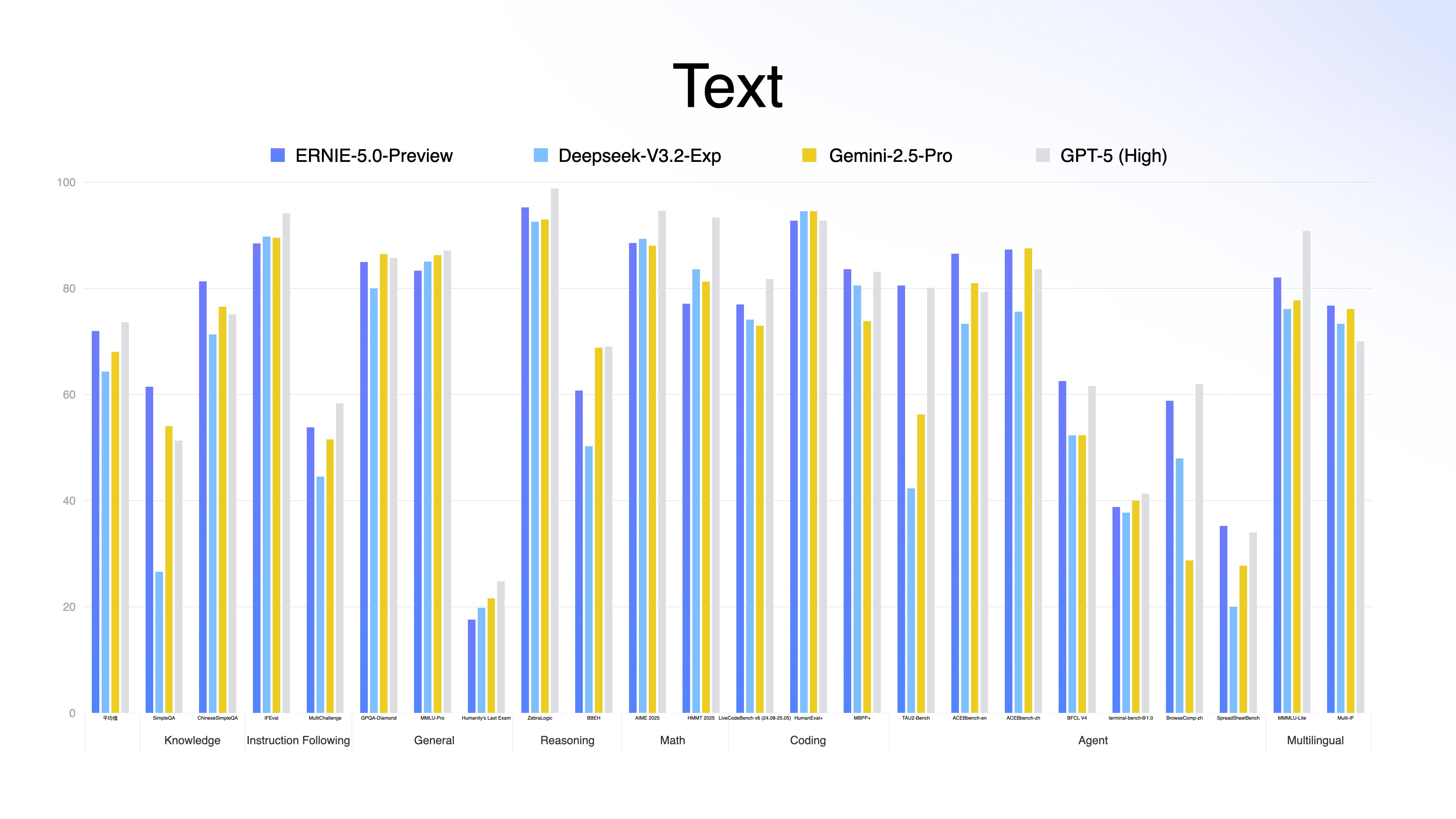

With upgraded foundational abilities, ERNIE 5.0 achieves state-of-the-art performance across various benchmark evaluations. This time on the Text Arena, ERNIE-5.0-Preview-1103 scored 1471 in Software & IT Services, on par with GPT 5.1-high, and 1464 in Coding, matching chat-gpt-4o.

Model Introduction

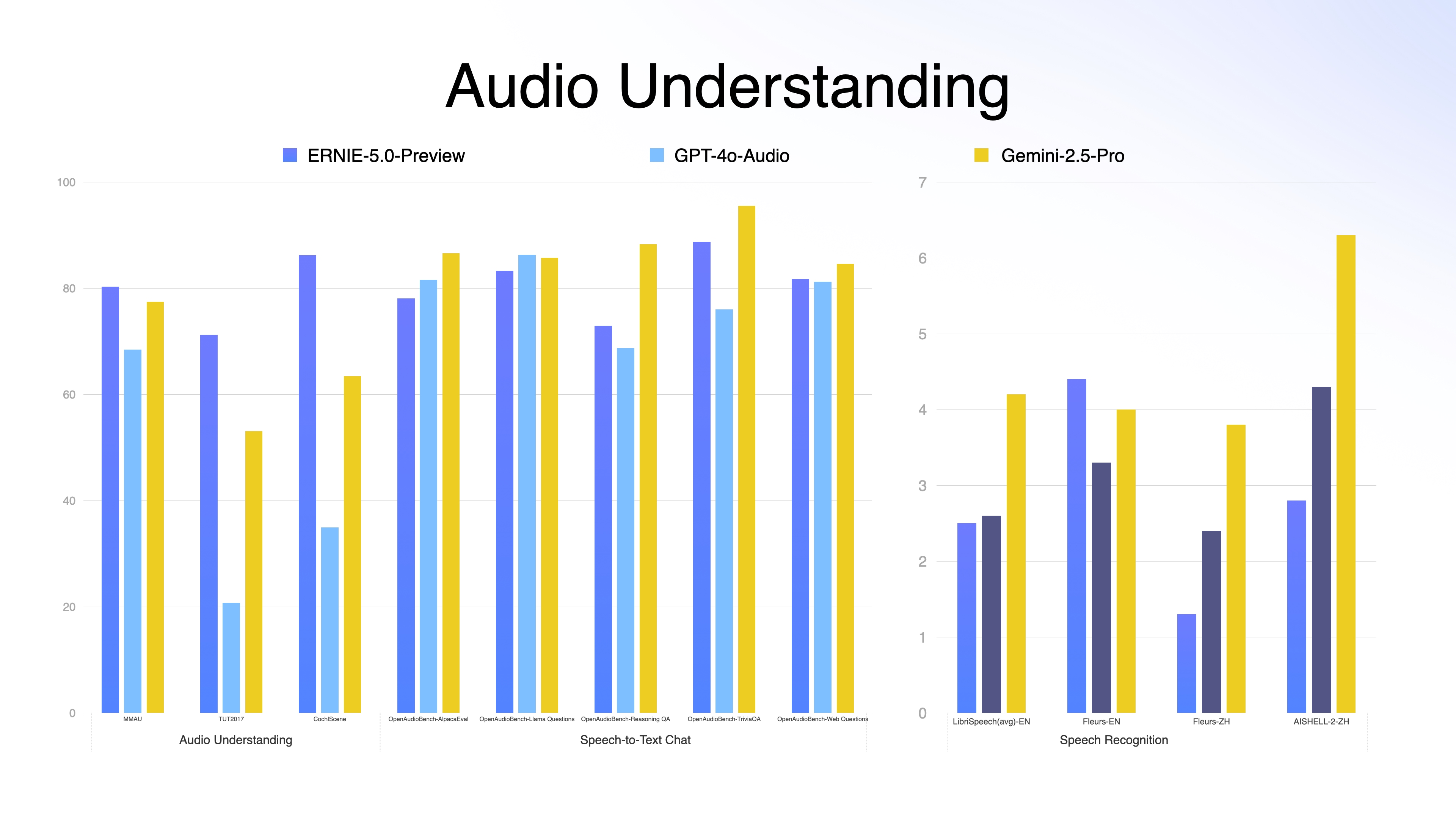

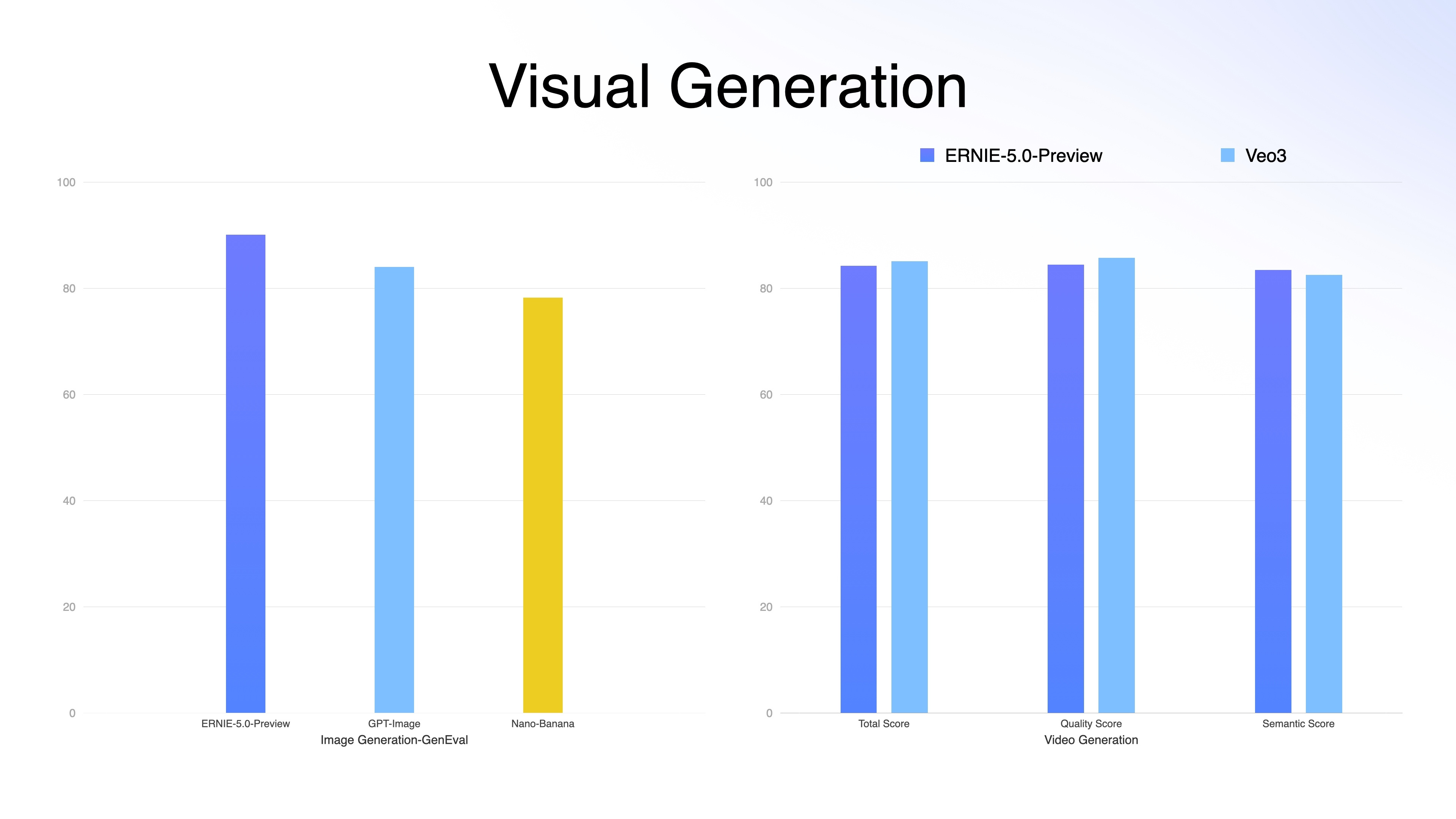

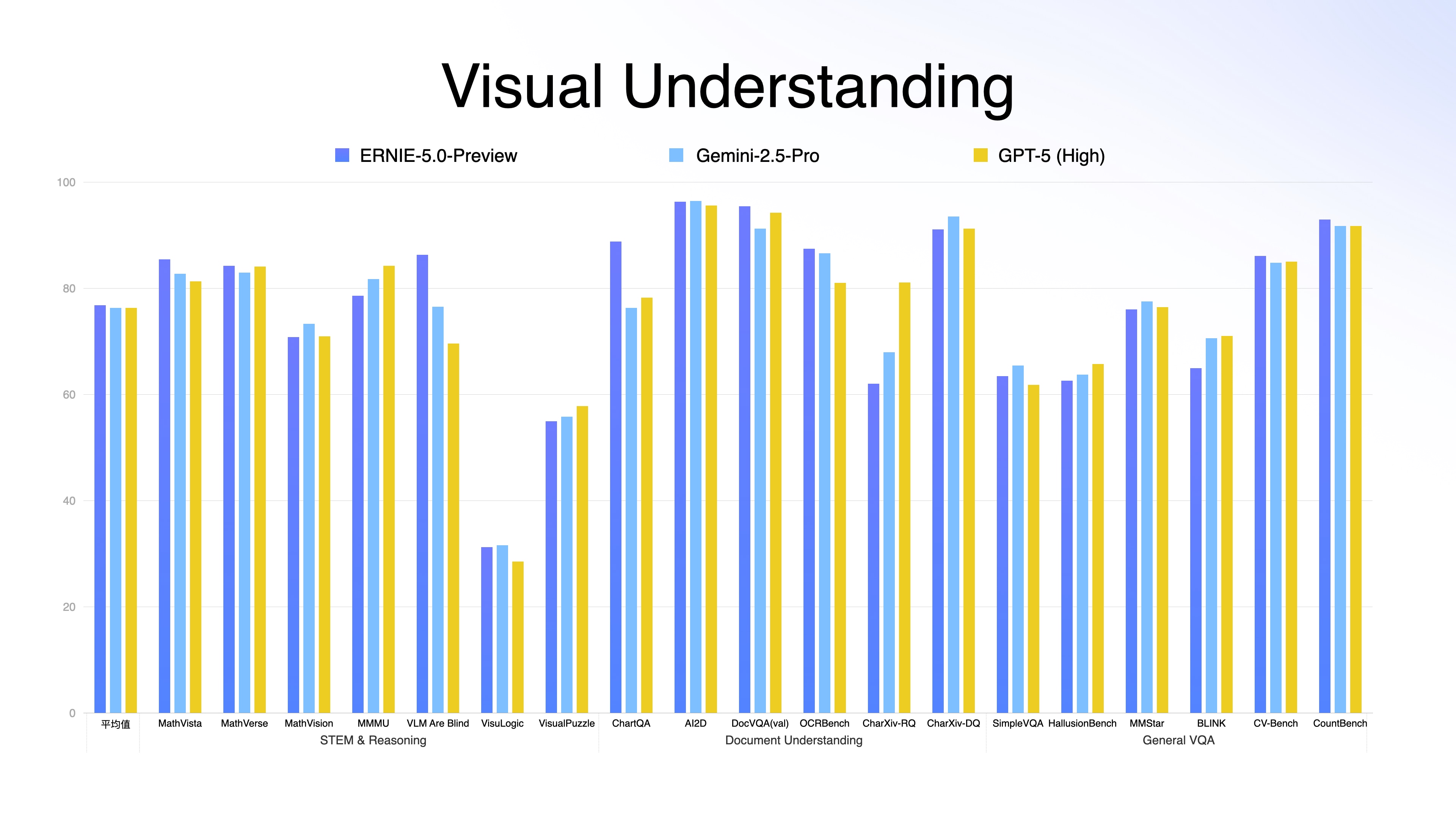

ERNIE 5.0 is the new-generation foundation model of the ERNIE series, built upon natively unified omni-modal modeling technology. From the ground up, it jointly models text, images, audio, and video, enabling comprehensive multimodal understanding and generation. With fully upgraded foundational abilities, ERNIE 5.0 achieves state-of-the-art performance across various benchmark evaluations, excelling particularly in multimodal understanding, instruction following, creative writing, factual reasoning, agentic planning, and tool use.

Technical Highlights

- Natively Omni-Modal Modeling: Unlike most multimodal models that rely on late fusion, ERNIE 5.0 integrates text, images, audio, and video data from the start of training. This enables seamless joint input and output across all modalities, achieving omni-modal understanding and generation.

- Unified Understanding and Generation: ERNIE 5.0 overcomes the long-standing challenge of unified multimodal understanding and generation through deep fusion of perceptual and semantic features across visual and auditory modalities. This enables seamless synergy between understanding and generation, marking a new leap in omni-modal intelligence.

- Unified Autoregressive Architecture: By discretizing training objectives across modalities and training under a single autoregressive framework, ERNIE 5.0 achieves deep feature fusion and collaborative optimization within one unified architecture. This significantly improves omni-modal modeling capability and consistency.

- Massive-Scale Mixture of Experts (MoE) Architecture: Built on the PaddlePaddle deep learning framework, ERNIE 5.0 features over 2 trillion parameters, ranking among the largest publicly disclosed models globally. Its ultra-sparse expert design (with <3% active parameters) delivers powerful multimodal performance while dramatically reducing computation and inference costs.

- Agentic Capability Enhancement for Long-Horizon Tasks: By synthesizing long-horizon task trajectory data from large-scale real-world/simulated tool environments, we perform data augmentation during pre-training and post-training. The model is then trained end-to-end with multi-round reinforcement learning, leveraging Chain-of-Thought and Chain-of-Action. This approach significantly improves the model’s agentic and tool-use capabilities.
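
To make the sparse Mixture of Experts idea above more concrete, here is a minimal sketch of generic top-k expert routing in plain Python. It is not ERNIE 5.0's actual router, whose details are not described here. The function names, the toy scalar "experts", and the choice of 128 experts with 2 active are all hypothetical, picked only for illustration. The first helper simply multiplies the two figures quoted above: taking 2 trillion parameters and a 3% active fraction at face value gives on the order of 60 billion parameters in use at a time.

```python
import math


def active_parameters(total_params: float, active_fraction: float) -> float:
    """Rough count of parameters in use, given a total and an active fraction."""
    return total_params * active_fraction


def top_k_route(gate_logits: list[float], k: int) -> dict[int, float]:
    """Select the k highest-scoring experts and softmax-normalize their weights.

    Generic top-k gating, assumed here for illustration only.
    """
    order = sorted(range(len(gate_logits)), key=lambda i: gate_logits[i], reverse=True)
    chosen = order[:k]
    peak = max(gate_logits[i] for i in chosen)  # subtract the max for numerical stability
    scores = {i: math.exp(gate_logits[i] - peak) for i in chosen}
    total = sum(scores.values())
    return {i: s / total for i, s in scores.items()}


def moe_forward(x: float, experts: list, gate_logits: list[float], k: int) -> float:
    """Run only the selected experts and combine their outputs by gate weight."""
    weights = top_k_route(gate_logits, k)
    return sum(w * experts[i](x) for i, w in weights.items())


if __name__ == "__main__":
    # Hypothetical configuration: 128 experts, 2 active per input.
    num_experts, k = 128, 2
    print(f"fraction of experts used: {k / num_experts:.2%}")

    # Figures quoted in the text, taken at face value.
    print(f"approx. active parameters: {active_parameters(2e12, 0.03):.3g}")

    # Toy experts: each one just scales its input by a different factor.
    experts = [lambda x, s=s: s * x for s in range(num_experts)]
    gate_logits = [0.0] * num_experts
    gate_logits[5], gate_logits[9] = 3.0, 3.0  # pretend the gate prefers experts 5 and 9
    print(moe_forward(1.0, experts, gate_logits, k))
```

The point of the sketch is the cost profile: only the `k` selected experts are evaluated, so compute scales with the active fraction rather than with the total parameter count.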