Introduction to FastDeploy 2.0

As large models such as the ERNIE 4.5 family continue to be open-sourced, interest in their inference performance and deployment efficiency has multiplied across both research and industry. FastDeploy 2.0, built on the PaddlePaddle framework, addresses this demand by offering an end-to-end toolkit for efficient deployment and high-performance inference of large models. The current release of FastDeploy supports several widely used open-source models and introduces a high-throughput inference architecture based on Expert Parallel (EP) and Prefill-Decode Disaggregation(PD). In benchmark tests with the ERNIE 4.5 model, FastDeploy 2.0 achieves 56K/21K tokens per second in input/output throughput. It also includes a near-lossless 2-bit quantization strategy, enabling trillion-parameter models to run on a single GPU. FastDeploy 2.0 is designed to reduce the complexity of deploying large models, improve inference efficiency, and optimize resource utilization, making it easier for researchers and enterprises to bring large-model applications into practical use.

Fastdeploy 2.0 Highlights

FastDeploy 2.0 achieves highly efficient inference deployment for large-scale models by the following key innovations:

- Unified interface support: FastDeploy 2.0 is compatible with the OpenAI API protocol and fully aligned with the vLLM interface, supporting both local and service-based inference modes, which assures easy integration and utilization.

- Integrated high-performance optimizations: The toolkit incorporates a range of inference acceleration techniques—including low-bit quantized operators, CUDA Graph, speculative decoding, context caching, segmented prefill, and Prefill/Decode Disaggregation—enabling ERNIE 4.5 models to achieve strong inference throughput.

- Extensive quantization support: FastDeploy 2.0 supports weight, activation, and KV cache quantization down to 8-bit, 4-bit, and 2-bit levels, allowing trillion-parameter models to run on a single GPU with minimized accuracy loss.

- Optimized for heterogeneous hardware: Inference is optimized across a wide range of hardware platforms, including NVIDIA GPUs, KUNLUNXIN P800, Iluvatar BI-V150, Hygon K100AI, and Enflame S60.

- Production-ready deployment features: For practical industrial scenarios, FastDeploy 2.0 includes traffic scheduling features such as real-time load-aware scheduling and distributed load balancing for scalable and stable deployment.

Performance and Benchmark Results

In addition to deployment capabilities, FastDeploy 2.0 integrates a range of quantization techniques. With a single command, users can enable weight-only 8-bit, 4-bit, or FP8 online quantized inference. Static W4A8 quantization is also supported. Built on deeply optimized CUTLASS kernels, the system dynamically selects the most suitable quantization strategy based on model architecture and hardware platform.

FastDeploy 2.0 also introduces a high-performance, modular speculative decoding framework. Performance is further enhanced through kernel-level fusion for pre/post-processing, dynamic batching, parallel verification, and virtual padding to accelerate token validation. The system is fully compatible with context caching, Prefill-Decode Disaggregation, Expert Parallel, and chunked prefill.

For MTP (Multi-Turn Prompt) inference, FastDeploy supports logical and physical address separation of the KV cache to enable context caching across various layers of both the target model and the MTP module. Prefill-Decode Disaggregation is also applied to MTP communication to reduce overhead and improve end-to-end inference throughput.

Additionally, FastDeploy 2.0 provides optimized CUDA Graph support. With PaddlePaddle’s dynamic-to-static conversion, the toolkit enables both static and dynamic graph capture. In testing with lightweight ERNIE models, decoding speed was improved by more than 2 times on average.

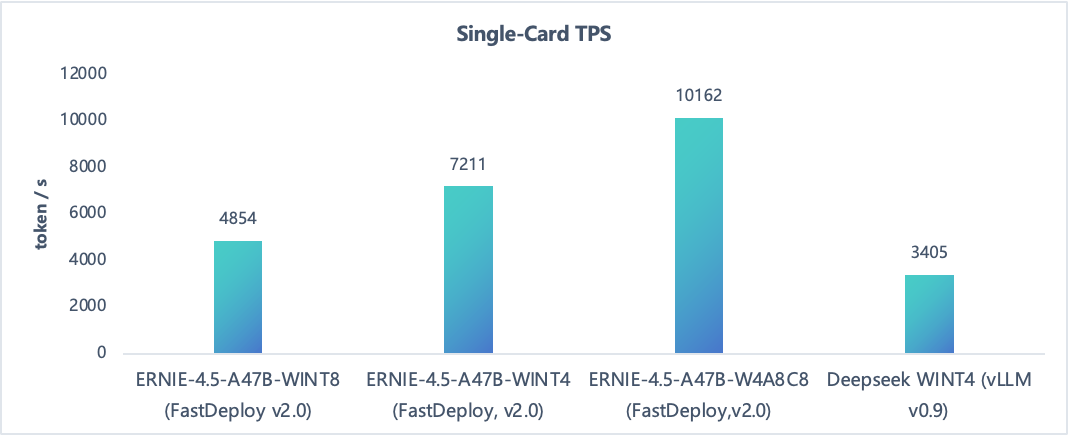

Single-Node Deployment of ERNIE-4.5-300B-A47B

FastDeploy supports weight-only 4-bit inference, enabling deployment with as few as 4 GPUs while maintaining near-lossless accuracy. When deploying ERNIE-4.5-300B-A47B model on a single-GPU, it delivers approximately 23% higher QPS compared to full-weight 8-bit inference, reducing hardware requirements and improving efficiency at the same time. To further optimize MoE quantization, FastDeploy introduces W4A8 quantization along with 8-bit compression for KV cache, resulting in an additional ~40% QPS gain. On a single H800 GPU, the achieved TPS outperforms the 8-GPU vLLM deployment of WINT4 DeepSeek by 198%.

Single-Node Deployment of Lightweight ERNIE 4.5 Models

Under the condition of 1.1K input sequence length, the A3B model on an H800 GPU maintained TPOT within 25 ms, while the 0.3B and 0.6B models on an A30 GPU kept TPOT within 16 ms.

Compared to deploying Qwen3 models of lightweight scale, FastDeploy demonstrated notable performance advantages by achieving 5% and 12% higher throughput than vLLM. For lightweight ERNIE 4.5 MoE models, throughput outpaced similar-scale Qwen3 models by 99% and 118%, respectively— highlighting FastDeploy’s efficiency on small-scale MoE deployments.

2-Bit Quantization

PaddlePaddle’s 2-bit quantization approach reduces MoE weights from BF16 to 2-bit, significantly lowering memory fingerprint and deployment resource requirements during inference. For the 300B-parameter ERNIE-4.5 model, this method compresses weight storage from 600 GB to 89 GB, enabling deployment on a single 141 GB NVIDIA GPU.

The 2-bit quantization is based on convolutional encoding and inherits ideas from Trellis Code Quantization and Bitshift Trellis, while introducing substantial improvements to the codebook design and encoding algorithm. Such enhancements reduce quantization loss and further improve inference efficiency.

Compared to conventional scalar quantization, PaddlePaddle’s method achieves better accuracy; compared to traditional vector quantization, it delivers faster inference speed. Quantized ERNIE-4.5-300B-A47B models retain near-lossless accuracy across multiple benchmark datasets.

| Test Set | IFEval | BBH | DROP | GSM8K | CMath | CMMLU |

|---|---|---|---|---|---|---|

| WINT4 | 88.17 | 94.43 | 91.17 | 96.21 | 96.50 | 89.92 |

| WINT2 | 85.40 | 92.02 | 89.97 | 95.98 | 96.00 | 86.22 |

Large-Scale Distributed Inference

FastDeploy enables large-scale distributed inference for MoE models through Expert Parallelism (EP). It currently supports a fully separated deployment of the ERNIE-4.5-300B-A47B model.

Dispatch and combination operations across devices are handled by FastDeploy’s DeepEP engine, which manages both intra-node and inter-node communication. The low-latency mode of DeepEP has been further enhanced through a two-stage optimization process, resulting in a 2 times improvement in communication performance.

The strategy can be summarized as follows: when the expert index maps to a local GPU, data is transferred via NVLink. When the target expert resides on a remote machine, a two-stage transmission is employed—first via RDMA to the corresponding rank on the destination machine, and then via NVLink to the target GPU.

In practice, however, implementing low-latency mode poses challenges. To avoid introducing CPU-side synchronization, metadata cannot be sent in advance, resulting in increased complexity at each communication stage. Meanwhile, due to the involvement of intra-GPU, inter-GPU, and inter-node transfers—each with coupled data directions—naive memory ordering can cause performance degradation. Proper handling of memory consistency becomes critical.

To address these challenges, FastDeploy implements metadata-free signaling through a kernel-level design using three layers of atomic semaphore mappings across seven signaling types. For memory consistency control, FastDeploy employs fine-grained ordering mechanisms across the SM, GPU, and system layers. This ensures accuracy while minimizing performance overhead during multi-stage data transfers.

To support efficient KV cache transmission, FastDeploy includes a lightweight, custom-built RDMA-based communication library that requires only a basic RDMA runtime environment. The library supports both NVIDIA GPUs and KUNLUNXIN XPUs, and is designed for ease of deployment.

Unlike existing solutions, FastDeploy’s implementation introduces several enhancements to improve performance, including a reduced number of CQEs and support for PCIe Relaxed Ordering. In benchmark tests using Mellanox ConnectX-7 400G NICs, both FastDeploy and Mooncake implementations fully utilized bandwidth under multi-threaded load, approaching the theoretical hardware limits. In single-threaded scenarios, FastDeploy achieved a 1.1 to 6.9 times higher throughput compared to Mooncake.

The ERNIE-4.5-300B-A47B model, when optimized with W4A8 quantization, KV cache quantization, communication improvements, KV cache transfer optimization, and MTP speculative decoding, reached 50 ms TPOT on H800 hardware for 2K-input / 400-output sequences, with throughput of up to 56K/21K tokens per second. The data represents a 17% improvement in output TPS over the baseline reported in the original ERNIE 4.5 technical report.

Real-Time Load-Aware and Distributed Load Balancing Scheduling

Inference tasks typically involve varying input lengths, with output lengths tied to the specific task, making them difficult to predict in advance. Most open-source scheduling solutions rely on round-robin or input-length-based approaches, which fall short of ensuring global load balancing for dynamic outputs in inference clusters. FastDeploy leverages a dedicated cache server to enable real-time global load awareness and a distributed load-balancing strategy. This feature can be activated directly via startup parameters without separate deployment, significantly improving cluster throughput and Time to First Token (TTFT) metrics.

- In hybrid PD deployment scenarios, the scheduler employs a proactive pull-based approach combined with a Work Stealing strategy for load balancing. Each inference instance maintains a task queue in the cache, allowing idle nodes in the cluster to “steal” tasks from high-load nodes for processing. This effectively mitigates long-tail load issues, boosting overall service performance.

- In Prefill-Decode Disaggregation(PD) scenarios, the scheduler operates as a distributed system. Inference instances register their information in the cache and periodically report real-time load to update their status. The scheduler monitors cluster load in real time via the cache. During scheduling, it first identifies a set of low-load nodes based on the input token count of the inference request, then randomly selects a node from this set for task assignment. This approach minimizes load fluctuations caused by multiple schedulers directing requests to the same instance within a single synchronization cycle.

Multi-Hardware Inference Deployment

Most deployment tools for large models in the open-source community primarily support NVIDIA and AMD GPUs. FastDeploy not only optimizes for NVIDIA GPUs but also comprehensively addresses diverse hardware deployment needs. FastDeploy introduces a hardware adaptation layer that abstracts the underlying compute infrastructure, provides a unified model invocation interface, and efficiently supports various device backends. The layer includes kernel implementations in CUDA, Triton, and C++, among others. Currently, it enables high-performance inference on a wide range of hardware, including KUNLUNXIN P800, Iluvatar BI-V150, Hygon K100AI, and Enflame S60 (see examples later for specific usage details).

Getting Started with FastDeploy 2.0

Local Offline Deployment Example

from fastdeploy import LLM, SamplingParams

sampling_params = SamplingParams(top_p=0.95)

llm = LLM(model="ERNIE-4.5-0.3B")

outputs = llm.chat(messages=[{"role": "user", "content": "Write me a poem about yourself"}], sampling_params)

Service-Based Inference Deployment Example

- Launching the service with a single command

python -m fastdeploy.entrypoints.openai.api_server --model baidu/ERNIE-4.5-0.3B-Paddle --max-model-len 32768

- After launching, request the service using the following commands:

curl -X POST "http://0.0.0.0:8180/v1/chat/completions" \

-H "Content-Type: application/json" \

-d '{"messages": [{"role": "user", "content": "Write me a poem about yourself"}]}'

Once the service is up and running, you can easily integrate with existing tools and workflows using the OpenAI-compatible API provided by FastDeploy 2.0. For detailed documentation, installation guides, and advanced configuration options, please refer to the FastDeploy 2.0 repository and documentation.

Multi-Hardware Efficient Deployment Example

As an example, deploying the ERNIE-4.5-300B-A47B-Paddle model on KUNLUNXIN P800 hardware can be done by following these steps:

# Step1. Create and access the container using a precompiled image.

mkdir Work

cd Work

docker pull ccr-2vdh3abv-pub.cnc.bj.baidubce.com/paddlepaddle/fastdeploy-xpu:2.0.0

docker run --name fastdeploy-xpu --net=host -itd --privileged -v $PWD:/Work -w /Work \

ccr-2vdh3abv-pub.cnc.bj.baidubce.com/paddlepaddle/fastdeploy-xpu:2.0.0 \

/bin/bash

docker exec -it fastdeploy-xpu /bin/bash

# Step2. Launch an OpenAI API-compatible service based on the ERNIE-4.5-300B-A47B-Paddle model.

export XPU_VISIBLE_DEVICES="0,1,2,3" or "4,5,6,7"

python -m fastdeploy.entrypoints.openai.api_server \

--model baidu/ERNIE-4.5-300B-A47B-Paddle \

--port 8188 \

--tensor-parallel-size 4 \

--max-model-len 32768 \

--max-num-seqs 64 \

--quantization "wint4" \

--gpu-memory-utilization 0.9

# Step3. Send a sample request using curl.

curl -X POST "http://0.0.0.0:8188/v1/chat/completions" \

-H "Content-Type: application/json" \

-d '{"messages": [{"role": "user", "content": "Where is the capital of China?"}]}'

Resources

- Github Repository: FastDeploy

- ERNIE 4.5 open models